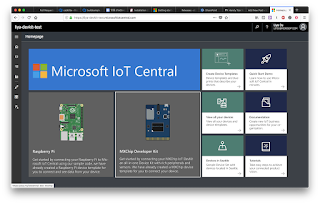

New features for Azure IoT Central

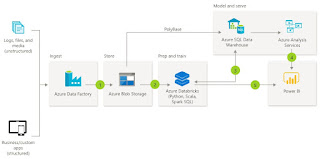

Can your IoT solution grow with you? Are you protecting data on devices and in the cloud? Azure IoT Central is designed for building highly secure, scalable IoT solutions. The following new Azure IoT Central features are now available:

1. 11 new industry-focused application templates to accelerate solution builders across the retail, healthcare, government, and energy industries.
2. Public APIs to access Azure IoT Central features for device modelling, provisioning, lifecycle management, operations, and data querying.
3. Management of edge devices and IoT Edge module deployments.
4. Seamless device connectivity with IoT Plug and Play.
5. Application export to enable application repeatability.
6. Extensibility options ranging from no-code/low-code actions to data export to Azure PaaS services.
7. Scale through multitenancy, supporting both device and data sovereignty without sacrificing manageability.
8. User access cont...